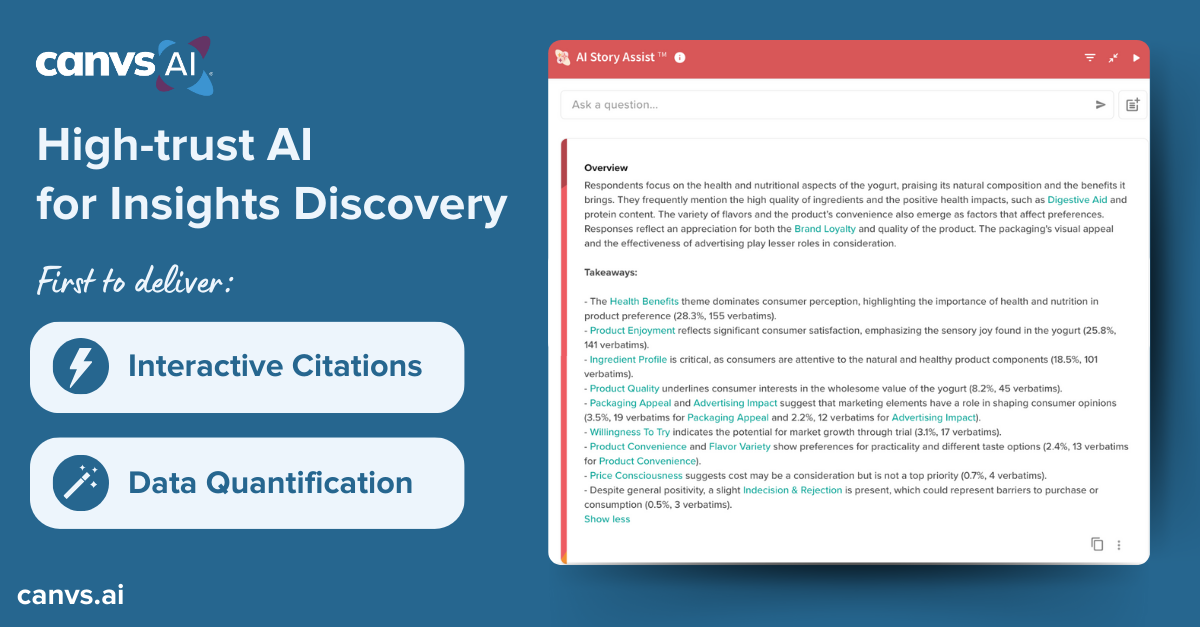

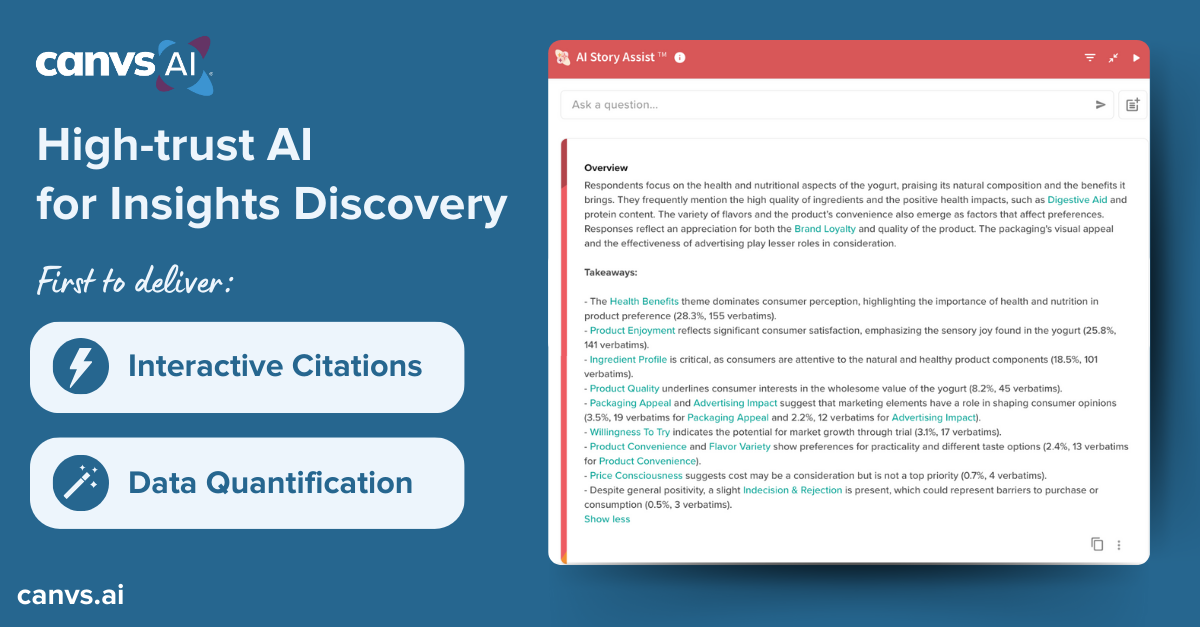

New AI Story Assist™ Executive Summary Includes Interactive Citations and Instant Data Quantification, Boosting Insights Productivity and Confidence

April 11, 2024 – Canvs AI, the leading insights platform built for text analysis, advances high-trust AI for insights discovery with the latest release of AI Story Assist™. The release introduces a new executive summary overview that incorporates interactive citations and quantifies the themes, topics and emotions identified in open-ended text data. This high-trust approach adds transparency and efficiency for Insights and CX teams, allowing them to take a "trust, but verify" approach to using generative AI for insights.

Join our Special Product Tour Webinar

Join Canvs AI Founder & CEO Jared Feldman for a special launch event on April 18th at 1 PM ET to see a demo and learn more about our approach to high-trust AI.

Canvs AI delivers a purpose-built insights platform that unites generative AI with the quantification of themes, topics, and emotions in unstructured text from any source. AI Story Assist also allows researchers to ask their data natural-language questions and drill into quantifiable analysis to further explore and validate their data-driven story narratives. More than 50,000 business questions have been asked and answered by AI Story Assist since its launch over a year ago.

While generative AI has captured the imaginations of consumers and brands as a way to boost productivity, concerns linger over the accuracy of its outputs. As *Ethical Machines* author Reid Blackman, PhD, writes in Harvard Business Review, "One significant risk related to LLMs like OpenAI's ChatGPT, Microsoft's [Copilot], and Google's [Gemini] is that they generate false information."*

"Trust is the number one barrier to realizing the incredible potential of generative AI for consumer insights – insights leaders need to understand the source of any AI-generated insight before they stake their reputation on it," said Kristi Zuhlke, principal of Generative AI for Insights. "With its latest release, Canvs AI sets the bar for high-trust AI by incorporating interactive citations and quantification, along with full transparency to the source material. This is exactly the approach insights teams need to make AI a core part of their workflows."

Since the introduction of its insights platform in 2018, Canvs AI has prioritized trust, transparency, and privacy within AI capabilities. With its latest release, Canvs AI reaffirms its commitment to a transparent, high-trust AI experience across four key areas of the platform:

- Interactive citations: Enhances transparency and lets researchers verify the authenticity of AI-generated summaries and takeaways.

- Closed-domain approach: Ensures data privacy and guarantees that all outputs originate solely from your data source.

- Connected analysis: Combines the efficiency of generative AI with robust insights discovery tools for deeper analysis.

- Clear, actionable insights: Equips researchers to swiftly and confidently deliver action-oriented insights to stakeholders, regardless of their expertise level.

“Without trust, insights generated from AI are worthless. That’s why Canvs AI has prioritized transparency in our AI-powered insights platform from day one,” said Jared Feldman, Founder & CEO of Canvs AI. “This release marks an industry milestone in high-trust AI for insights, building citations directly into the AI Story Assist executive summary generated on every project, along with the ability to ask custom questions and drill into quantified analysis of open-ended feedback.”

About Canvs AI

Canvs AI is the leading insights platform for transforming open-ended consumer feedback into actionable business intelligence using high-trust, AI text analysis. Canvs is used by some of the world’s most admired brands to accelerate time-to-insights, reduce cost, and deepen their understanding of consumers. For more information or to request a customized demo, please visit canvs.ai or email sales@canvs.ai.

*Blackman, Reid, Generative AI-nxiety, Harvard Business Review, August 14, 2023, https://hbr.org/2023/08/generative-ai-nxiety