For consumer insights professionals, the promise of generative AI is clear: enhanced efficiency through automation and elevated data storytelling capabilities. Being able to quickly and seamlessly translate vast amounts of unstructured consumer feedback data into polished insights has obvious operational benefits. From dramatically reducing time spent reading through verbatim comments, to surfacing thematic trends and emotional undercurrents, to generating compelling data-driven narratives, generative AI could supercharge productivity across the entire insights workflow.

The potential is tantalizing. Imagine simply uploading datasets of survey responses, consumer reviews, social media data, and more. An AI assistant then automatically reads and comprehends all of it, identifying key themes, trends, and the emotions driving consumers. It synthesizes those findings into crisp insights and recommendations, complete with compelling data visualizations - all in a fraction of the typical analysis time frame.

For an industry often bogged down in tedious data wrangling and manual coding of unstructured text, these AI capabilities can feel... well, magical.

But of course, that sense of AI magic is a double-edged sword for insights professionals. Our profession's entire value depends on providing rigorously data-driven, credible strategic recommendations that leadership teams can trust implicitly. Any hint of bias, inaccuracy, or hallucination in an AI system's insights can quickly erode confidence and negate any imagined productivity gains.

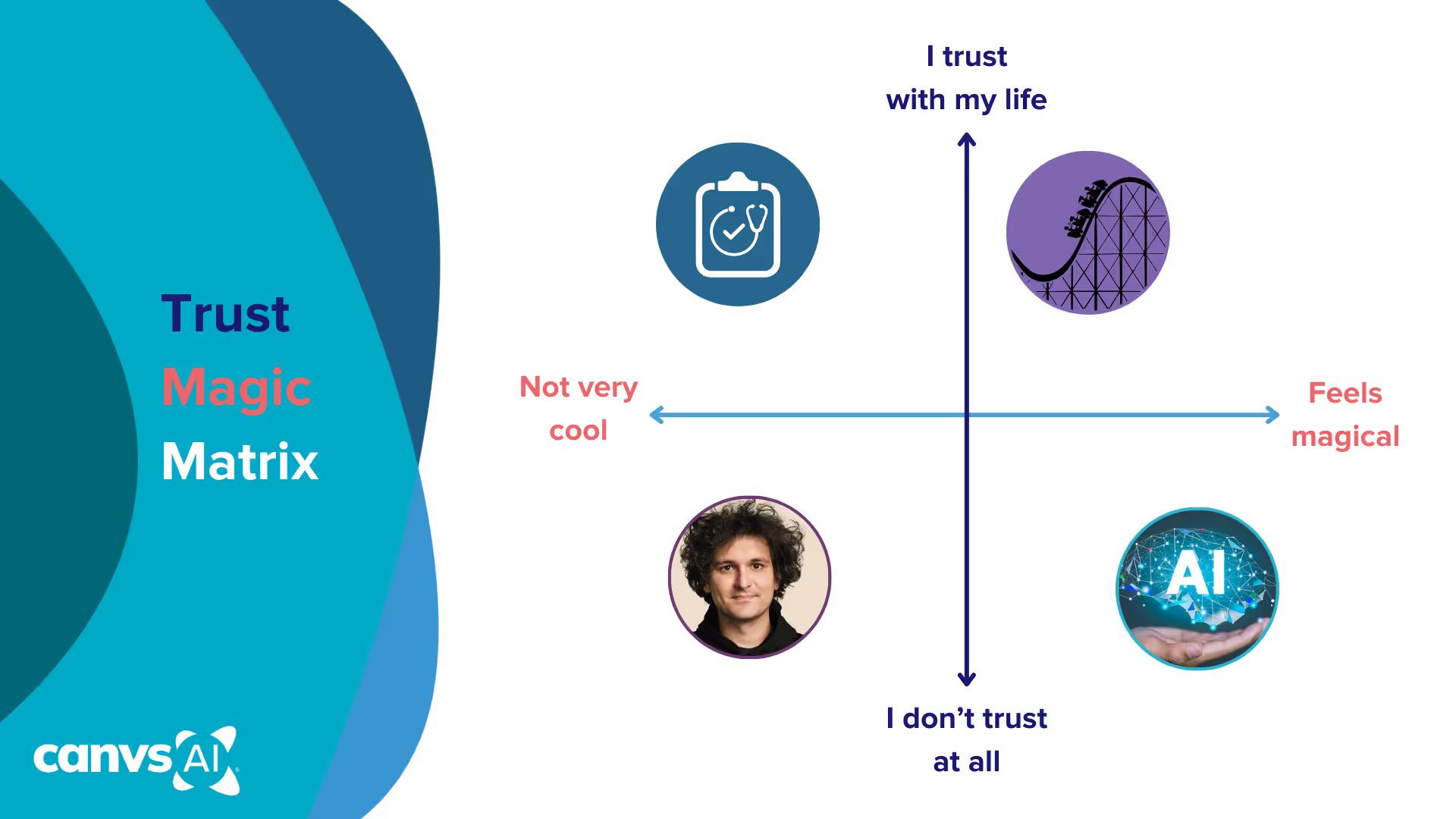

This tension between the desire to harness generative AI's magical capabilities and the need to maintain high standards of trust is what I call the "Trust-Magic Matrix." The x axis represents the perception of magic, while the y axis represents the level of trust (figure A). We might put our accountant or doctor in the upper left: not magical, but highly trustworthy. A thrill ride at Disney might be in the upper right quadrant: it’s magic, and we trust it with our lives. On the other hand, a figure like Sam Bankman-Fried or an email scam belongs in the lower left: neither magical nor trustworthy.

Most current AI applications unfortunately sit in the "magical but low trust" quadrant. The capabilities are incredibly impressive, but there are still too many "hallucinations" and potential inaccuracies to go all-in just yet.

At Canvs, we're on a mission to elevate generative AI out of that quadrant and into a zone of high trust and high magic for insights leaders. It's an ambitious goal - insights pros are a naturally skeptical bunch who rely on data-driven evidence over hype. That's why we've been laser-focused on developing high-trust AI capabilities you can actually bet your business on. Our AI Story Assist is designed as a true "trusted insights copilot": it shows its work transparently, quantifying insights by citing the underlying data sources.

In the past year alone, Canvs customers have used AI Story Assist to ask more than 40,000 business questions of their data and dig deeper into automated insights. Crucially, it operates as a closed-domain system, drawing only from your proprietary data rather than writing fictional narratives.

While other AI tools are overwhelmed when faced with high data volumes, our AI insights engine is built for enterprise scale and speed. It can analyze millions of inputs instantly, a feat that would take human teams months, if it were practical at all.

AI Story Assist combines cutting-edge large language models with customizable rules, human-in-the-loop verification, and transparent data citation to build trust into the AI-powered insights generation process. The system can rapidly identify key themes, trends, and emotions across all of your unstructured text data. Just as importantly, it backs up high-level insights with crystal-clear data citations mapped back to the original consumer verbatims.

Ultimately, high-trust AI is about having an intelligent, transparent copilot amplifying your insights capacity: one that makes you smarter and more efficient, not one that asks you to disregard decades of institutional knowledge and blindly trust a black box.

As insights leaders evaluate AI solutions, they must prioritize six key criteria of trusted AI:

- Transparency

- Quantification methodology

- Proprietary, closed-domain data focus

- High-volume scalability

- Iterative question-asking

- Blazing-fast speed

Anything less risks undermining confidence in your insights altogether.

The AI revolution is here for consumer insights. But it will only fulfill its transformative potential if we approach it in a way that upholds rigorous standards of trust and reliability. At Canvs AI, that's been our guiding philosophy from day one.